Or, how Rule 34 of the interwebs still holds true for engineering blogs.

I guess the words of the week are Invagination and Exphallation. Two words that sound dirty, kinda are, but really aren't. I haven't been able to find them on standard dictionary websites, but MIT professors say them all the time, as do some of my manufacturing textbooks, so they must exist.

To put it simply, this picture depicts an invagination, which you can think of as a hole of some sort, and an exphallation, or protrusion. Their geometry isn't perfect, but when you snap the exphallation into the invagination, the invagination's thin walls flex to receive the exphallation and then spring back to hold the two parts together.
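To put a rough number on how far those thin walls can flex before they crack, one common simplification (an assumption here, not something from our actual design process) is to treat a wall as a rectangular cantilever beam of length l and thickness h. Beam theory gives a tip deflection y = F·l³/(3EI) and a peak surface strain ε = F·l·h/(2EI) at the root, so y = (2/3)·ε·l²/h, independent of the load and of the plastic's stiffness. The helper names below are hypothetical, and the 2% allowable strain is a placeholder; real limits come from the material's datasheet.

```python
"""Rough cantilever model of a snap-fit wall (a simplifying assumption).

Treats the flexing wall as a rectangular cantilever of length l and
thickness h. Beam theory gives tip deflection y = F*l**3 / (3*E*I) and peak
root strain eps = F*l*h / (2*E*I); dividing the two gives
y = (2/3) * eps * l**2 / h, so the load F and stiffness E cancel out.
"""


def max_snap_deflection(length_mm: float, thickness_mm: float, allowable_strain: float) -> float:
    """Largest tip deflection (mm) before the root strain reaches allowable_strain."""
    return (2.0 / 3.0) * allowable_strain * length_mm ** 2 / thickness_mm


def root_strain(length_mm: float, thickness_mm: float, deflection_mm: float) -> float:
    """Peak bending strain at the wall's root for a given tip deflection (mm)."""
    return 1.5 * deflection_mm * thickness_mm / length_mm ** 2


if __name__ == "__main__":
    # Hypothetical wall: 10 mm long, 1 mm thick; 2% strain is a placeholder, not a datasheet value.
    print(f"max deflection: {max_snap_deflection(10.0, 1.0, 0.02):.2f} mm")  # 1.33 mm
    print(f"strain at 1 mm deflection: {root_strain(10.0, 1.0, 1.0):.3f}")  # 0.015
```

Because the allowable deflection grows with l² but only shrinks with 1/h, lengthening a wall buys more flex than thinning it by the same factor.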

*shudder* I feel dirty. Let me explain:

I'm doing some undergraduate research this summer! I'm working at the Laboratory for Electromagnetic and Electronic Systems (LEES) as a mechanical designer. Our overall project is designing various aspects of a potential new class that lets EECS freshmen get their feet wet.

In particular, we're designing a NerdKit, a container of sorts holding circuit prototyping elements such as breadboards, power supplies, a function generator... It should be compact and full-featured, and it should fit in a student's backpack. Also, the students make it themselves using a manufacturing technique called thermoforming: a heated, softened plastic sheet is draped over a mold, and vacuum suction beneath the mold pulls out the trapped air so the sheet conforms tightly to the mold, reproducing its shape.
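Since thermoforming stretches a fixed amount of plastic over a larger surface, the walls come out thinner than the starting sheet. A common first-order estimate (assumed here, not something we measured) is volume conservation: average wall thickness ≈ sheet thickness ÷ areal draw ratio, where the areal draw ratio is the formed part's surface area divided by the area of sheet that starts over the mold's footprint. The sketch assumes a simple open box; the function names and dimensions are hypothetical, and real parts thin unevenly, most of all at deep corners.

```python
"""First-order wall-thickness estimate for a thermoformed open box (an assumption)."""


def box_areal_draw_ratio(length: float, width: float, depth: float) -> float:
    """Formed surface area (bottom + four walls) divided by the footprint the sheet starts over."""
    footprint = length * width
    formed_area = footprint + 2.0 * depth * (length + width)
    return formed_area / footprint


def average_wall_thickness(sheet_thickness: float, length: float, width: float, depth: float) -> float:
    """Volume conservation: the same plastic volume spread over a larger area."""
    return sheet_thickness / box_areal_draw_ratio(length, width, depth)


if __name__ == "__main__":
    # Hypothetical 100 x 50 x 25 mm box formed from a 1.5 mm sheet.
    ratio = box_areal_draw_ratio(100.0, 50.0, 25.0)
    print(f"areal draw ratio: {ratio:.2f}")  # 2.50
    print(f"average wall: {average_wall_thickness(1.5, 100.0, 50.0, 25.0):.2f} mm")  # 0.60 mm
```

Deeper draws thin the walls quickly, which matters for features like snap fits that depend on thin, flexible walls.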

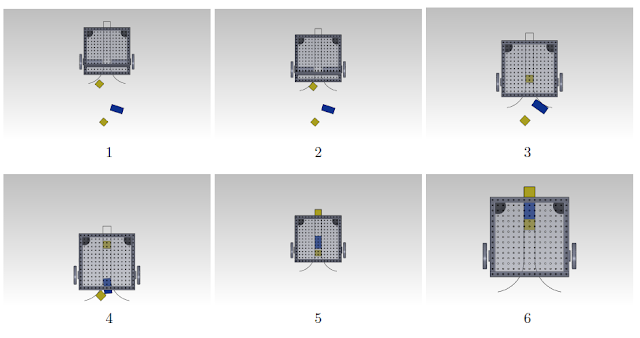

To start things off, my coworker and I are prototyping the more intricate design aspects: fastening and hinges. Here are the molds we made out of high-density polyurethane (HDP), copying the design of an apple container we had lying around.

Some thermoforming process pics and a vacuum fail...

And the thermoformed pieces! The insert fit into the container like a charm, though it took some modifications to get my hinge snap-fit thing working right...

To get suction in hard-to-reach spaces, I had to drill some holes in the mold. This let the vacuum pull out the air trapped in those pockets, so the plastic formed tighter against the mold, resulting in...

This working snap fit: much better! That invagination and exphallation look so happy together :3. The hinge and snap fit took a few iterations to get right, but in the end they worked well! The issue with this design, however, is that the part is formed facing up, so it closes like a book when removed from the machine. The parts I'm making for the NerdKit case are all formed facing down, as if the book were lying cover-up. The hinge that worked for the up-facing part won't work for the final product, so I need to design a sturdier hinge that can take a beating and works in the down-facing orientation.

Good thing I have all summer!

Unfortunately, the mold broke as I was removing it from the part, probably because it was too thin: HDP is expensive shit, so we were frugal with our molds. Next time I won't be so cheap, and I'll use a urethane release spray.

My next endeavor will be learning to 2.5D CNC-mill molds for some other test hinges. I feel like most of product design is iterating through ideas until you find a prototype that works... It gives me new respect for product designers. (PS: Watch the documentary *Objectified*; it's awesome.)