The Final Challenge had the following premise: An autonomous rover lands on Mars with the intention of building a predetermined structure for future human colonists to use.

Within the scope of the class, however, our robot simply had to navigate this maze, collect those blocks, and build some kind of structure in any way possible. Easy enough, right? We'll just make this thing:

The only exception (to my dismay) was that no flamethrowers, saws, hammers, or other devices that could destroy or otherwise disassemble the environment could be used. Oh well...

The freedom in the robot's design allowed each team to come up with a unique approach to the problem. Historically, most teams reused as much code from previous labs as possible, which meant active manipulation for collecting blocks and a passive construction method: funneling the blocks into a structure on board the robot that could then be placed in the environment.

My team, Team VI: The Sentinels, opted for the inverse: passive collection and active construction. We went for an (almost) entirely open-loop approach, navigating with only the provided map and block locations plus killer wheel-encoder odometry.
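The post doesn't describe how map coordinates were turned into motion. One common open-loop scheme is to rotate in place toward each waypoint and then drive straight to it. The sketch below assumes that scheme, with positions in meters and headings in radians; `plan_legs` and `wrap_angle` are hypothetical names for illustration, not the team's actual code.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in meters, map frame (assumed convention)


def wrap_angle(a: float) -> float:
    """Wrap an angle in radians to the range [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def plan_legs(start: Point, start_heading: float,
              waypoints: List[Point]) -> List[Tuple[float, float]]:
    """Turn a waypoint list into (turn_radians, drive_meters) commands.

    Hypothetical helper: each leg rotates in place to face the next
    waypoint, then drives straight to it. Positive turns are
    counterclockwise (left).
    """
    legs: List[Tuple[float, float]] = []
    x, y = start
    heading = start_heading
    for wx, wy in waypoints:
        dx, dy = wx - x, wy - y
        distance = math.hypot(dx, dy)
        if distance == 0.0:
            # Already at this waypoint: no turn, no drive.
            legs.append((0.0, 0.0))
            continue
        target_heading = math.atan2(dy, dx)
        legs.append((wrap_angle(target_heading - heading), distance))
        # Open loop: assume the leg was executed perfectly when planning the next one.
        x, y, heading = wx, wy, target_heading
    return legs
```

For example, starting at the origin facing +x, `plan_legs((0.0, 0.0), 0.0, [(1.0, 0.0), (1.0, 1.0)])` yields a 1 m straight drive, then a quarter turn to the left followed by another 1 m drive. Wrapping the turn angle keeps the robot from spinning the long way around.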

See, our robot has no vision, bump, or distance sensing. Before we first demonstrated successful navigation, members of other teams started talking smack about our Sentinel, calling the poor thing "blind".

And it's certainly blind. Not one camera or sensor on that thing. We implemented computer vision with both a webcam and a Kinect, but in the end it wasn't really necessary because of our dead-accurate encoder odometry.

(We experimented with mounting two optical mice underneath the robot and using their readings as odometry. We ran a test to see which was more accurate, the mice or the wheel encoders. Our robot started at one corner of a 1-meter square, and we gave it 10 waypoints, each at a random corner of that square. Over multiple trials, we found, to our surprise, that the wheel encoders consistently got it to within 2 centimeters of its final waypoint, while the optical mice always left it more than 5 centimeters away. Clearly, we had some kick-ass encoders.)
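For readers curious how wheel encoders become a position estimate, here is a minimal differential-drive odometry sketch. It is not the team's code: the tick count, wheel radius, and wheel base are made-up placeholder values, and it uses the common midpoint-heading approximation for each update.

```python
import math
from dataclasses import dataclass

# Placeholder robot parameters (assumed; the post does not give the real values).
TICKS_PER_REV = 2000     # encoder ticks per wheel revolution
WHEEL_RADIUS_M = 0.0625  # wheel radius, meters
WHEEL_BASE_M = 0.43      # distance between the two drive wheels, meters

METERS_PER_TICK = 2 * math.pi * WHEEL_RADIUS_M / TICKS_PER_REV


@dataclass
class Pose:
    x: float = 0.0      # meters
    y: float = 0.0      # meters
    theta: float = 0.0  # radians, counterclockwise from +x


def update_pose(pose: Pose, d_left_ticks: int, d_right_ticks: int) -> Pose:
    """Return the new pose after one pair of encoder tick deltas (differential drive)."""
    d_left = d_left_ticks * METERS_PER_TICK
    d_right = d_right_ticks * METERS_PER_TICK
    d_center = (d_left + d_right) / 2.0          # distance moved by the axle midpoint
    d_theta = (d_right - d_left) / WHEEL_BASE_M  # change in heading
    # Midpoint approximation: move along the average heading over the interval.
    mid_theta = pose.theta + d_theta / 2.0
    new_theta = pose.theta + d_theta
    return Pose(
        x=pose.x + d_center * math.cos(mid_theta),
        y=pose.y + d_center * math.sin(mid_theta),
        theta=math.atan2(math.sin(new_theta), math.cos(new_theta)),  # wrap to [-pi, pi]
    )
```

Called once per control-loop iteration with the change in each wheel's encoder count, `update_pose` integrates the robot's pose. Because any error in `WHEEL_RADIUS_M` or `WHEEL_BASE_M` is applied on every update, those two values are a major source of accumulated drift and are worth calibrating carefully.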

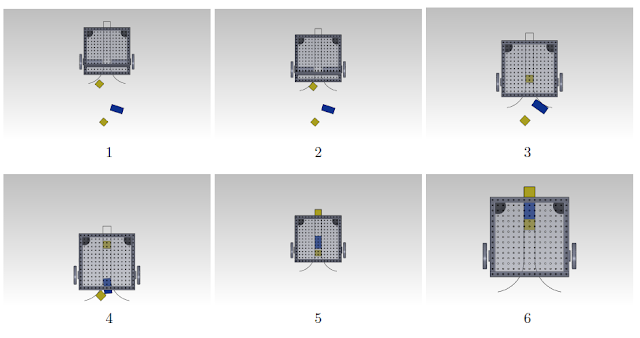

Our robot navigated the maze, driving over blocks, which were collected by a bent sheet-aluminum funnel suspended underneath.

As the blocks were collected, they slid along the funnel into the space underneath, which was sized so that the blocks would interlock and form a straight line.

Once we had collected enough blocks, the robot drove to a spacious area of the maze and backed away, leaving the blocks in front of it.

And then construction began, using only the provided manipulator. It was actuated by hobby servos that were too weak for the job and prone to overheating, which made their response nonlinear. But it worked well enough:

We somehow got it working. First, a teammate bet me that I couldn't get it to stack a 5-block tower. (If I got it working, I'd get $100.00. If I didn't, I'd buy him a sandwich. A reasonable bet.) I got it working once, though with inconsistent results, and won the bet.

Then came hours of fine-tuning that sounded a lot like the video above does at 03:15.

More fine-tuning led to consistent behavior, 5 minutes of fame on the MIT EECS Facebook page and the 6.141 website (and an A). Above is footage of it stacking a tower. (Including Neil waking up in disbelief after sleeping on the ground for a couple of hours. The life of an MIT student sure is a glamorous one.)

And here is footage of it completing an entire Challenge run, complete with Will talking about my old dormitory (known in the '80s as "McKegger") and me rambling on about all the nerdy stuff I learned (and didn't) in the class.

This robot, like most 6.141 bots of the past, actively collected blocks using the manipulator and dumped them into a container in the back where they were passively assembled into a structure. The real innovation made by this team was the design of the back-mounted hopper, and the type of structure it left in its wake.

The initial blocks fall into place on the bottom, oriented 45 degrees up from the ground and aligned side by side. The following blocks fill in the gaps between them, stabilizing the structure, which is supported only by the corners of the bottom blocks.

When time is up, the hopper rotates back like a dump truck until the blocks contact the ground. The robot then pulls away, leaving this arch-like structure.

The class was a blast and I learned a ton. Unfortunately, we don't get to keep the robots because they get disassembled for next year.

But that's okay, more robots are coming...

(Yes, I got my hands on one of these, too)
